What the Biden Administration’s New Executive Order on AI Will Mean for Cybersecurity

We analyzed the Biden administration's 48-page executive order on artificial intelligence to determine its impact on cybersecurity.

A presidential administration committed to the responsible rollout of one of the most consequential technologies since the advent of the internet has proposed a set of regulations to govern it.

Adoption of AI products has accelerated rapidly in the year since OpenAI released its large language model-powered chatbot, ChatGPT. Today, the generative AI platform boasts more than 100 million weekly users worldwide and is used by developers at 9 in 10 Fortune 500 companies.

In that time, academics, researchers, and even the CEOs of companies producing AI tools themselves have called for guidance and responsible safeguards for AI.

Their concerns include worker displacement; copyright infringement; widening wealth inequality in financial services; the spread of discrimination and misinformation; and national security in a world where other global powers also have access to AI.

Drata reviewed the Biden administration's 48-page executive order on AI and analyses from law firms and researchers to identify the proposals most likely to affect U.S. cybersecurity.

Academics have described the order as "sweeping" because it proposes rules both for the federal government's own use of AI and for managing risks to privacy, consumer protection, national security, and civil and human rights across the public and private sectors.

It's important to note that the order serves mainly as a "plan for making plans," so we will learn more as the individual regulations are drafted.

The Biden administration said it wants not only to prevent harm but also to promote the responsible development of AI tools that will keep the U.S. at the forefront of what's been dubbed the "AI arms race."

On that front, the order aims to "maximize the benefits of AI" for working Americans, expand grant funding for AI research, attract workers skilled in building advanced AI systems, and hire AI professionals into federal agencies.

From a cybersecurity perspective, perhaps the most impactful element of the order is its call to use AI to find and fix vulnerabilities in infrastructure. This technology is powerful in the hands of security professionals, but it is equally powerful in the hands of attackers. Use cases like this one are where you can immediately see the "AI arms race" take shape.

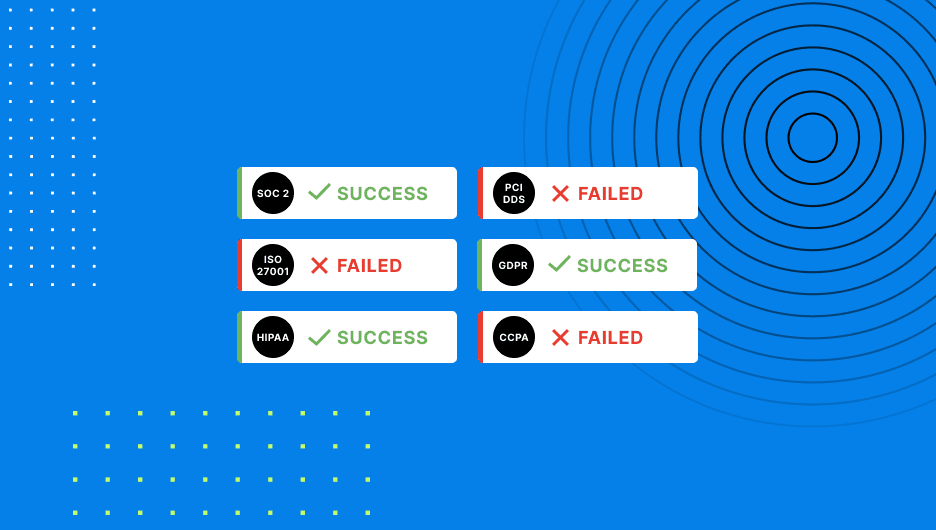

A Benchmarking Initiative From the Departments of Commerce and Energy

The Department of Commerce is the federal agency implementing and overseeing many of the executive order's new rules and regulations. The department's National Institute of Standards and Technology (NIST) released its AI Risk Management Framework in January 2023, a voluntary framework to help organizations in both the public and private sectors develop and deploy AI systems responsibly.

The executive order gives the Department of Commerce, working with the Department of Energy and other agencies, nearly a year to build on the NIST framework with a companion resource for generative AI products like ChatGPT and Google's Gemini model. The order also directs the department to launch an initiative to create guidance and benchmarks for evaluating and auditing AI capabilities, with a focus on capabilities that could cause harm in areas such as cybersecurity.

Training Record Transparency

The Department of Commerce will also require private companies to provide the government with records of the training processes for advanced models considered "dual-use foundation models." AI models are developed by training an algorithm on large amounts of data, from which it learns patterns it can then use to answer prompts, generate media, or perform tasks.

The administration's executive order defines a "dual-use foundation model" as an AI model that is "trained on broad data; generally uses self-supervision; contains at least tens of billions of parameters; is applicable across a wide range of contexts; and that exhibits, or could be easily modified to exhibit, high levels of performance at tasks that pose a serious risk to security, national economic security, national public health or safety, or any combination of those matters."

The executive order states that the government is particularly concerned about how those models might make it easier to develop weapons of mass destruction, enable "powerful offensive cyber operations," and evade human control or oversight once deployed.

Annual CISA Risk Reports

Importantly, the order also lays the groundwork for protecting critical infrastructure around the country from AI-related risks, including cyberattacks. Every agency with oversight of critical infrastructure, such as the Department of Energy, will be required to assess the risks AI poses to its sector at least once a year, in coordination with the director of the Cybersecurity and Infrastructure Security Agency (CISA).

Treasury Department Best Practices for Managing Cybersecurity in Financial Institutions

The same section of the order, which covers the CISA risk reporting, also requires the Treasury Department to compile and publish best practices for financial institutions working to mitigate cybersecurity risks posed by AI systems.

In an August 2023 report, the International Monetary Fund found that AI models pose an inherent risk to customers' data privacy, civil rights, and financial security. Financial institutions like banks are vulnerable to phishing attacks and identity theft that could become much more sophisticated and aggressive with the adoption of generative AI.

The report also detailed the risks of institutions using AI for tasks previously performed by humans, such as assessing a borrower's risk before approving a loan, which could result in discriminatory lending decisions.

Potential Regulations for Using AI in Health Care Settings

Over the next year, an AI task force at the Department of Health and Human Services, established in consultation with the Departments of Defense and Veterans Affairs, will develop policies for the responsible deployment of AI in the health care sector.

Those policies would cover AI systems used for new drug discovery, health care devices, public health initiatives, and care financing, and the order calls for regulatory action "as appropriate."

The order specifically calls out the need for guidance on how software security standards can be updated to protect personally identifiable information maintained by companies in the sector. Health care stands to benefit greatly from advances in AI, but it is also among the sectors most frequently targeted by cyberattacks.

Incorporation of AI in Federal Government Operations With CISA Guidance

The federal government isn't planning to sit on the sidelines and merely set the rules for the rest of the players. It, too, is interested in deploying and using AI for its own operations.

The order explicitly discourages government agencies from "imposing broad general bans or blocks on agency use of generative AI." Instead, it encourages agencies to limit access to specific generative AI tools based on risk assessments of each one.

In part, that means designating a Chief AI Officer at each agency. The Office of Management and Budget will give these officers and their agencies recommendations for implementing AI responsibly while accounting for practical constraints such as budgets, workforce training, and necessary cybersecurity processes.

Agencies will also receive recommendations for "red teaming" generative AI systems: adversarially probing a system to exploit its weaknesses so defenders can learn how to protect it better.
